Come on, that looks pretty fancy! :)

    install.packages('devtools')
    library(devtools)
    install_github('ChristofferFlensburg/superFreq')
    library(superFreq)

It's made with a public function in my superFreq package, called plotColourScatter(). It behaves essentially like the base plot() function, but transitions nicely into a density plot when the dots start to overlap. It also has slightly different defaults, such as dots rather than circles, and colour. There are a few different density plotting functions around, such as hexbin or the built-in smoothScatter. They all have different advantages and disadvantages.

Hexbin, as you can see, bins the values and then heatmaps the number of hits in each bin. This solves the issue with overplotting, as you can represent arbitrarily large numbers of dots in each bin (depending on the colour scale you choose, ofc). It also avoids plotting all the dots, which allows small vectorised images for any number of input points. Also, hexagons look so much cooler than squares. They used squares in the 90s, come on, we are past that now. You do lose some resolution though. You can't easily see if the outer hexagons contain 1 or 3 dots (unless you gear your colour scale very much in that direction), and you can't see where in the hexagon the dots are. Similarly, you won't pick up on density structures anywhere if they are smaller than your hexagon size. You get a pixelation effect (but with hexagonal pixels, which is cooler). You can just make smaller hexagons though, which mitigates this.
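To make the hexagon-size trade-off concrete, here is a minimal sketch using the hexbin package, where the `xbins` argument sets how many hexagons span the x range. The simulated data and the two `xbins` values are my own picks for illustration, and the code simply skips the plot if hexbin isn't installed.

```r
# Sketch: same data binned with large and with small hexagons.
set.seed(1)
x <- rnorm(10000)
y <- x + rnorm(10000)

if (requireNamespace("hexbin", quietly = TRUE)) {
  library(hexbin)

  coarse <- hexbin(x, y, xbins = 20)  # few, large hexagons
  fine   <- hexbin(x, y, xbins = 80)  # many, small hexagons

  # Every point lands in exactly one hexagon, so the counts sum to length(x).
  print(c(coarse = sum(coarse@count), fine = sum(fine@count)))

  # The finer binning resolves smaller structures, at the price of more cells.
  print(c(coarse = length(coarse@count), fine = length(fine@count)))

  plot(fine)
}
```

Smaller hexagons get you closer to seeing individual dots, but each cell then holds fewer points, so the colour scale has less to work with.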

smoothScatter finds a smoothed density, and then plots the first 100 "low density" points on top of it. This is done through some complicated-looking stats that I won't pretend to understand. I think it's clear from the plot above what it does though: it just smears out each point a bit. Here you also don't have to plot all points, so I assume smoothScatter produces decently sized vectorised images. Plotting the low-density points lets you better see the structure of the points in the sparse regions, but only for the first 100 dots. You can of course increase that parameter (nrpoints) to see the dot structure in denser regions as well. This comes at the cost of getting a weird-looking transition where the dots are no longer plotted. When you lean back, it almost looks like the density drops a bit just where the dots are no longer plotted. This can be mitigated by plotting fewer (or no) points, so you have to find a compromise here. The smearing also removes structures smaller than the smearing radius.
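If you want to see that compromise for yourself, a quick sketch along these lines should do it. It only uses the base smoothScatter() function and its `nrpoints` argument; the simulated data and the particular values are just my choices for illustration.

```r
# Sketch: the same data with the default, many, and no overplotted points.
set.seed(1)
x <- rnorm(10000)
y <- x + rnorm(10000)

op <- par(mfrow = c(1, 3))
smoothScatter(x, y, main = "nrpoints = 100 (default)")
smoothScatter(x, y, nrpoints = 1000, main = "nrpoints = 1000")
smoothScatter(x, y, nrpoints = 0, main = "nrpoints = 0")
par(op)

# ret.selection = TRUE hands back the indices of the overplotted points.
sel <- smoothScatter(x, y, nrpoints = 100, ret.selection = TRUE)
```

The smearing radius itself is set by the `bandwidth` argument, so shrinking that is the way to recover smaller structures.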

plotColourScatter actually plots all the points. Then it plots them another 3 times, each time with a smaller size, more transparent and with a brighter colour. The first round of plotting picks out the dots and overplots as usual with the default plot() function. The replotting is just barely visible for single dots, but adds up in high density regions. This shows exactly what is going on in the sparse regions, makes a smooth transition into dense regions, and is at the same time very good at picking up small structures in the dense regions with the increasingly small dot sizes. The price is that the output files will be large if you use a vector format and have many points. 30000 points (typical for genomics) gives an output file of around 5MB, which is acceptable but can be cumbersome if you have many files. If you start going up into many hundreds of thousands or millions of points, you more or less have to rasterise as PNG.
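The idea is simple enough to sketch in a few lines of base graphics. To be clear, this is not the superFreq source: `layeredScatter` is a made-up helper, and the colours, shrink factor and alpha values are guesses I picked for illustration.

```r
# Hypothetical helper (not part of superFreq): layered overplotting.
layeredScatter <- function(x, y, cex = 1, base_col = "#1f4e9c",
                           n_layers = 3, ...) {
  # First pass: ordinary solid dots, so single points stay visible.
  plot(x, y, pch = 16, cex = cex, col = base_col, ...)

  # Each extra layer is smaller, more transparent and brighter.
  ramp <- colorRampPalette(c(base_col, "#bfe9ff"))(n_layers + 1)
  layers <- data.frame(
    cex_scale = 0.7^seq_len(n_layers),   # 0.70, 0.49, 0.343
    alpha     = 0.3 / seq_len(n_layers), # 0.30, 0.15, 0.10
    col       = ramp[-1],
    stringsAsFactors = FALSE
  )
  for (i in seq_len(n_layers)) {
    points(x, y, pch = 16, cex = cex * layers$cex_scale[i],
           col = adjustcolor(layers$col[i], alpha.f = layers$alpha[i]))
  }
  invisible(layers)
}

set.seed(1)
x <- rnorm(10000)^3
y <- x + rnorm(10000)^3
layers <- layeredScatter(x, y, xlim = c(-5, 5), ylim = c(-5, 5))
```

Since `cex` is multiplied through, you can also pass a per-point vector to weight the dots. For very many points, wrapping the call in `png(...)` and `dev.off()` rasterises the output instead of writing every dot to a vector file.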

To display what happens when you have smaller structures, let's look at

    x = rnorm(10000)^3
    y = x + rnorm(10000)^3

Cubing the normal distribution makes it spike at 0, so we will get lines at x=0 and x=y, and a point at the intersection x=y=0:

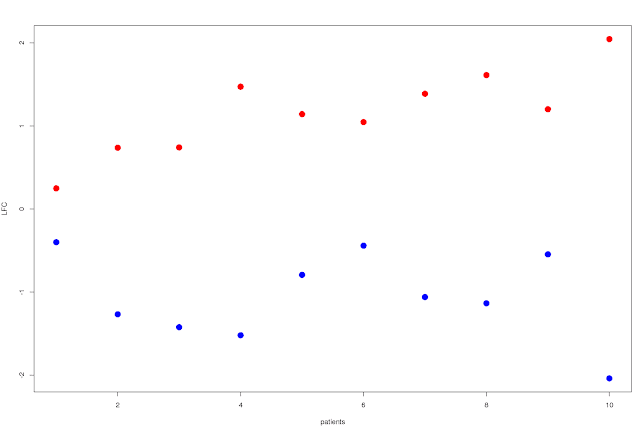
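For the curious, the spike at 0 is easy to check numerically. This little sketch compares how much of a standard normal, and of its cube, falls within a small distance of zero; the cutoff `eps = 0.1` is an arbitrary choice of mine.

```r
# Probability of landing within eps of zero, before and after cubing.
eps <- 0.1
p_plain <- 2 * pnorm(eps) - 1        # P(|z|   < eps)
p_cubed <- 2 * pnorm(eps^(1/3)) - 1  # P(|z^3| < eps) = P(|z| < eps^(1/3))
print(round(c(plain = p_plain, cubed = p_cubed), 3))
```

So roughly 36% of the cubed values sit within 0.1 of zero, against about 8% for the plain normal, which is what piles the points up along x=0 and x=y.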

plotColourScatter also allows a natural weighting of the dots. Just change cex if you want some dots to have a larger impact than others. This is done in the opening plot at the top, showing GC-content correction. For example, take normal distributions and weight with the sum of the decimal parts of the coordinates:

    x = rnorm(30000)
    y = x + rnorm(30000)
    w = x - floor(x) + y - floor(y)
    plotColourScatter(x,y, cex=w)

This shows weight of points in both sparse and dense regions, as well as making a smooth transition. Also, plotColourScatter comes with a red colour scheme! :)

    plotColourScatter(x,y,col='defaultRed')

So, well, I think all three methods presented here work well. They have slightly different limitations and strengths, but in most cases it comes down to preference, and what people find most aesthetically pleasing. In case you like what you see here and want to use it, go install superFreq at https://github.com/ChristofferFlensburg/superFreq and get plotting:

    install.packages('devtools')
    library(devtools)
    install_github('ChristofferFlensburg/superFreq')
    library(superFreq)

    x = rnorm(30000)
    y = x + rnorm(30000)
    w = x - floor(x) + y - floor(y)

    plotColourScatter(x,y, cex=w)
    plotColourScatter(x,y, cex=w, col='defaultRed')

This is done in base graphics, so I assume it can't easily be merged into ggplot, sorry about that, all you ggplot buddies. If you really want to though, I assume it wouldn't be much work to do the same thing in ggplot, either from scratch (just overplot with smaller, brighter, transparent dots) or from the pretty short source found around line 700 of https://github.com/ChristofferFlensburg/superFreq/blob/master/R/runDEexon.R (as of version 0.9.15).
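A from-scratch ggplot version could look something like the sketch below. I haven't tuned it against plotColourScatter, so the sizes, colours and alpha values are placeholders rather than the superFreq defaults, and the plot is only built if ggplot2 is installed.

```r
# Sketch: stack geom_point layers that get smaller, brighter and more transparent.
set.seed(1)
df <- data.frame(x = rnorm(30000))
df$y <- df$x + rnorm(30000)

p <- NULL
if (requireNamespace("ggplot2", quietly = TRUE)) {
  library(ggplot2)
  p <- ggplot(df, aes(x, y)) +
    geom_point(size = 1.2, colour = "#1f4e9c") +                # solid base dots
    geom_point(size = 0.8, colour = "#5a93d6", alpha = 0.30) +
    geom_point(size = 0.5, colour = "#93c4ee", alpha = 0.15) +
    geom_point(size = 0.3, colour = "#bfe9ff", alpha = 0.10) +
    theme_classic()
  # print(p) draws the plot
}
```

Per-point weights would go in as a `size` aesthetic on each layer, which takes a bit more fiddling with the size scale than cex does in base graphics.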

If you make some nice plots with this, feel free to share to make me happy. :)